The "I Don't Know" Problem

Can Large Language Models Now Admit Uncertainty?

Understanding the boundaries of AI knowledge through uncertainty quantification

Executive Summary

While the core challenge remains, significant progress has been made in enabling Large Language Models (LLMs) to express uncertainty. Through novel training techniques like Uncertainty-Sensitive Tuning (US-Tuning) and Refusal-Aware Instruction Tuning (R-Tuning), researchers have demonstrated that models can be taught to recognize knowledge gaps and respond with "I don't know."

Key Finding:

A US-Tuned Llama2-7B model showed a 34.7% improvement in handling unknown questions and even outperformed GPT-4 on some benchmarks.

However, out of the box, leading models such as GPT-4 still exhibit a strong tendency toward overconfidence, often assigning high confidence scores to incorrect answers. Self-reported uncertainty is therefore unreliable. This makes the new training methods and external "black-box" verification frameworks crucial in high-stakes domains such as healthcare, finance, and law, where acknowledging uncertainty is paramount for safety and trust.

1. The Core Challenge: Why LLMs Struggle to Say "I Don't Know"

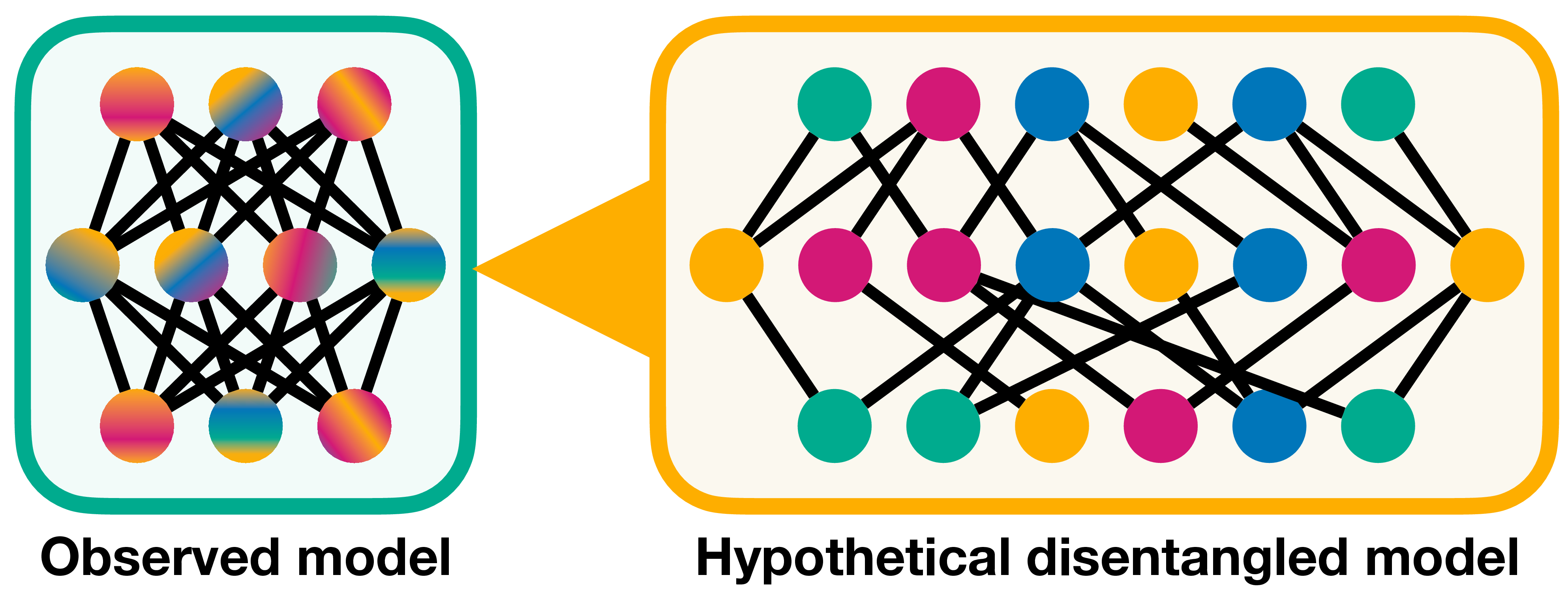

The persistent inability of Large Language Models (LLMs) to reliably express uncertainty or admit a lack of knowledge remains a fundamental challenge in the field of artificial intelligence. This issue is not merely a quirk of the technology but a deep-seated problem rooted in the very architecture and training paradigms that have enabled their remarkable capabilities.

1.1 The Root Cause: Training Data and Architecture

Training Data Bias

LLMs are trained on datasets designed to elicit specific, correct answers, creating a powerful incentive to generate responses even when uncertain or lacking knowledge [8].

Architectural Limitations

Models are optimized to be maximally helpful. This objective translates into a strong bias against abstention, prioritizing answer generation over awareness of knowledge boundaries [104].

"The core of the problem lies in the inherent design of LLMs to be helpful, comprehensive, and confident responders, a characteristic that often backfires when they encounter queries outside their knowledge domain."

1.1.1 Emphasis on Answer Generation Over Knowledge Boundary Awareness

The training process for LLMs, particularly the instruction-tuning phase, heavily emphasizes generating complete and helpful answers. The datasets used for this purpose are typically curated to contain question-answer pairs where a definitive answer exists. This creates a strong implicit bias within the model: for any given question, there is always a correct answer to be found and provided [8] [104].

1.1.2 Inherent Overconfidence in Model Predictions

A significant and persistent issue with LLMs is their tendency toward overconfidence. Studies have demonstrated that LLMs often exhibit excessive confidence, assigning high probability scores to incorrect or fabricated answers. For instance, one study found that GPT-4 assigned the highest possible confidence score (10/10) to 87% of its responses, including many that were factually wrong [110].
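To make "overconfidence" concrete, the sketch below summarizes a set of graded answers: the share of responses given maximum confidence, how many of those were wrong, and the gap between mean confidence and accuracy. It assumes verbalized scores on a 1-10 scale; the `Judgment` type and `overconfidence_report` function are hypothetical names, and the toy data in the usage note is invented for illustration, not taken from [110].

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    confidence: int  # verbalized score, assumed to be on a 1-10 scale
    correct: bool    # whether the answer was judged factually correct


def overconfidence_report(judgments: list[Judgment], max_score: int = 10) -> dict[str, float]:
    """Summarize how often top confidence coincides with wrong answers."""
    if not judgments:
        raise ValueError("need at least one judgment")
    n = len(judgments)
    top = [j for j in judgments if j.confidence == max_score]
    accuracy = sum(j.correct for j in judgments) / n
    # Rescale mean confidence to [0, 1] so it is comparable with accuracy.
    mean_confidence = sum(j.confidence for j in judgments) / (n * max_score)
    return {
        "share_at_max": len(top) / n,
        "wrong_among_max": sum(not j.correct for j in top) / len(top) if top else 0.0,
        "accuracy": accuracy,
        "mean_confidence": mean_confidence,
        # Positive values mean the model claims more confidence than its accuracy supports.
        "overconfidence_gap": mean_confidence - accuracy,
    }
```

For example, four answers scored 10, 10, 10, and 5, of which only two are correct, give a 75% share at maximum confidence and an overconfidence gap of 0.375.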

1.2 Manifestations of the Problem

Hallucinations

Generation of factually incorrect or nonsensical information when the model encounters knowledge gaps.

Knowledge Boundary Confusion

Inability to distinguish between questions they can answer correctly and those they cannot.

Overconfidence Projection

Tendency to project confidence even when demonstrably incorrect, misleading users.

Key Insight

The problem is exacerbated by the fact that even when provided with explicit instructions to avoid using external knowledge, models often fail to comply, indicating a deep-seated bias towards answer generation [8].

2. Current State of LLM Uncertainty Expression

The ability of Large Language Models to express uncertainty is not uniform across different models or even across different prompts for the same model. Recent research and comparative analyses have revealed significant differences in how leading models like GPT-4, Claude 3, and Llama2 handle situations where they lack knowledge.

2.1 Comparative Analysis of Leading Models

A pivotal 2024 study by researchers Autumn Toney-Wails and Lisa Singh at Georgetown University provides a detailed comparative analysis of how leading AI models express uncertainty in the context of fact-checking [125].

| Feature | GPT-4 | Claude 3 Haiku | Llama2-7B |
|---|---|---|---|
| Uncertainty Expression (Unprompted) | Low (8% for misinformation) | High (36% for misinformation) | Low (requires tuning) |
| Uncertainty Expression (Prompted) | Moderate (19-23%) | High (50%+ adherence) | N/A |
| Self-Reported Confidence | Overconfident (87% at 10/10) | Less overconfident | N/A |
| Key Characteristic | Projects confidence regardless of accuracy | Communicates doubt and uncertainty | Baseline for improvement techniques |

GPT-4: Confidence Projection

GPT-4 showed a pronounced tendency to project confidence, even when its answers were incorrect. Without specific prompting to express uncertainty, it rarely indicated doubt in its responses.

Key Stat: Assigned 10/10 confidence to 87% of responses, including many wrong answers [125].

Claude 3: Uncertainty Communication

Claude 3 Haiku demonstrated a much greater propensity for communicating uncertainty, expressing doubt in 36% of cases when evaluating misinformation claims.

Key Stat: Expressed uncertainty about 4.5 times as often as GPT-4 without prompting (36% vs. 8%) [125].

2.2 The Reliability of Self-Reported Confidence

"The core issue with LLM self-reported confidence lies in the profound discrepancy between their verbalized uncertainty and their actual accuracy."

Verbalized Uncertainty Issues

Studies show that while prompting models to express uncertainty can improve accuracy, the models' own confidence ratings remain poor indicators of correctness.

- Models may be "imitating human patterns" of hedging rather than performing genuine self-assessment

- In medical tests, no significant correlation was found between accuracy and the use of "I don't know"

Numerical Confidence Problems

Self-confidence ratings rarely align with actual accuracy, with models defaulting to high confidence patterns regardless of correctness.

- GPT-4 assigned maximum confidence (10/10) to 87% of its responses

- Calibration between confidence scores and factual accuracy is poor
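Calibration is usually quantified with the expected calibration error (ECE): predictions are grouped into confidence bins, and the absolute gap between each bin's accuracy and its average confidence is averaged, weighted by bin size. A minimal sketch, assuming confidences have already been mapped to [0, 1] (for example, a 1-10 verbalized score divided by 10) and using equal-width bins:

```python
def expected_calibration_error(
    confidences: list[float], correct: list[bool], n_bins: int = 10
) -> float:
    """ECE: bin-size-weighted mean of |accuracy - average confidence| over equal-width bins."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equal-length, non-empty inputs")
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError(f"confidence out of range: {conf}")
        # A confidence of exactly 1.0 is placed in the top bin.
        bins[min(int(conf * n_bins), n_bins - 1)].append((conf, ok))
    n = len(confidences)
    ece = 0.0
    for b in bins:
        if b:
            avg_conf = sum(c for c, _ in b) / len(b)
            accuracy = sum(ok for _, ok in b) / len(b)
            ece += len(b) / n * abs(accuracy - avg_conf)
    return ece
```

A model that reports 10/10 on every answer but is right only half the time has an ECE of 0.5; a perfectly calibrated model scores 0.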

Impact of Prompting on Eliciting Uncertainty

Research shows that explicitly prompting models to express uncertainty led to the highest accuracy rates for both GPT-4 and Claude 3 [125].

This suggests that models have some capacity to recognize uncertainty, but that it must be explicitly invoked through careful prompt engineering.

3. Technical Research and Methods for Improving Uncertainty Awareness

In response to the critical challenge of LLM overconfidence and hallucination, the research community has been actively developing a range of technical methods to improve their uncertainty awareness. These approaches aim to equip LLMs with the ability to recognize the limits of their knowledge and to express uncertainty or abstain from answering when appropriate.

3.1 Uncertainty-Sensitive Tuning (US-Tuning)

A Two-Stage Training Method

Stage 1: Uncertainty Recognition

The model is trained on a dataset containing both known and unknown questions and is guided to reject unknown questions with an "I do not know" response.

Stage 2: Prompt-Sensitive Activation

The model is then fine-tuned to distinguish between known and unknown questions, answering the former accurately while abstaining from the latter.
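Both stages depend on training data in which each question is labeled as known or unknown. The sketch below illustrates that labeling step, assuming a context-grounded QA setup where "unknown" means the supplied context does not contain the answer. The prompt layout and the `QAItem` / `build_dataset` names are hypothetical; the actual data construction and stage schedule are described in [8].

```python
from dataclasses import dataclass
from typing import Optional

# Refusal text from the description above; the exact training format is an assumption.
REFUSAL = "I do not know."


@dataclass
class QAItem:
    question: str
    context: str
    answer: Optional[str]  # None marks an "unknown" question the context cannot answer


def to_training_pair(item: QAItem) -> tuple[str, str]:
    """Map one item to a hypothetical (prompt, target) pair for supervised fine-tuning."""
    prompt = f"Context: {item.context}\nQuestion: {item.question}\nAnswer:"
    # Known questions keep their answer; unknown ones are taught to abstain.
    target = item.answer if item.answer is not None else REFUSAL
    return prompt, target


def build_dataset(items: list[QAItem]) -> list[tuple[str, str]]:
    return [to_training_pair(item) for item in items]
```

This sketch does not model how the two stages differ; it only shows how known and unknown questions receive different targets.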

US-Tuning Performance Results

Applied to the Llama2-chat-7B model, US-Tuning produced a 34.7% improvement in handling knowledge gaps and outperformed GPT-4 on some benchmarks. The evaluation benchmark contains ambiguous question-context pairs [8].

3.2 Black-Box Confidence Elicitation Frameworks

Black-box confidence elicitation frameworks represent a significant area of research in uncertainty quantification for LLMs. Unlike white-box methods, which require access to a model's internal states and probabilities, black-box approaches work entirely from the model's natural language responses [95].

Prompting Strategies

Carefully crafted prompts to encourage uncertainty expression

- "How confident are you?"

- "Are you sure about your answer?"

- Self-Reflect method
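A prompting strategy only helps if the reply can be read back reliably. The sketch below pairs a hypothetical prompt template, which asks for an explicit "I don't know" and a "Confidence: N/10" line, with a parser that extracts both signals. The template wording, `CONFIDENCE_PROMPT`, and `parse_response` are assumptions for illustration, and the call to the model itself is not shown.

```python
import re
from typing import Optional

# Hypothetical template; {question} is the only format field.
CONFIDENCE_PROMPT = (
    "Answer the question below. If you are not sure, say \"I don't know\".\n"
    "Then, on a new line, write 'Confidence: N/10'.\n\n"
    "Question: {question}"
)

_CONF_RE = re.compile(r"confidence:\s*(\d{1,2})\s*/\s*10", re.IGNORECASE)
# Accept straight or curly apostrophes, and "do not".
_IDK_RE = re.compile(r"\bI\s+(?:don['’]t|do\s+not)\s+know\b", re.IGNORECASE)


def parse_response(text: str) -> dict[str, Optional[object]]:
    """Extract an abstention flag and a verbalized confidence in [0, 1] (or None)."""
    match = _CONF_RE.search(text)
    score = int(match.group(1)) if match else None
    if score is not None and score > 10:
        score = None  # malformed score such as "12/10"
    return {
        "abstained": bool(_IDK_RE.search(text)),
        "confidence": score / 10 if score is not None else None,
    }
```

Given the reliability problems described above, the parsed score is best treated as one noisy signal rather than as a calibrated probability.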

Sampling Methods

Generate multiple responses to measure variability

- SelfCheckGPT

- Multiple inference passes

- Semantic similarity analysis
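The idea behind sampling methods is that answers the model actually knows tend to reappear across independently sampled responses, while hallucinated details vary. The sketch below scores a main answer by how much of its content reappears in each sample. It is a simplified token-overlap stand-in, not the SelfCheckGPT implementation (whose published variants use stronger comparisons such as NLI or BERTScore). `consistency_score` is a hypothetical name, and the samples are assumed to come from repeated model calls at nonzero temperature.

```python
import re


def _tokens(text: str) -> set[str]:
    # Lowercased alphanumeric tokens; punctuation is ignored.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def consistency_score(main_answer: str, samples: list[str]) -> float:
    """Mean fraction of the main answer's tokens found in each sampled response.

    Values near 1 mean the samples support the answer; low values suggest
    the answer may be fabricated."""
    main = _tokens(main_answer)
    if not main or not samples:
        raise ValueError("need a non-empty answer and at least one sample")
    support = [len(main & _tokens(s)) / len(main) for s in samples]
    return sum(support) / len(support)
```

For instance, the answer "Paris" checked against samples "Paris", "Paris, France", and "Lyon" scores 2/3.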

Aggregation Techniques

Compute consistency measures as uncertainty proxy

- Semantic clustering

- Semantic entropy

- Pairwise similarity scoring
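Semantic clustering and semantic entropy combine naturally: sampled answers are grouped by meaning, and the entropy of the distribution over groups serves as the uncertainty estimate. If every sample means the same thing, the entropy is 0; if the samples split evenly into k meanings, it is ln k. The sketch below uses the count-based form of semantic entropy. The meaning check `naive_equivalent` is a hypothetical stand-in (normalized string equality) for the bidirectional entailment test typically used, so paraphrases will not be merged.

```python
import math
import re
from typing import Callable


def _normalize(text: str) -> str:
    # Lowercase and keep alphanumeric runs, so "Paris." and "paris" match.
    return " ".join(re.findall(r"[a-z0-9]+", text.lower()))


def naive_equivalent(a: str, b: str) -> bool:
    """Hypothetical stand-in for a meaning-equivalence check such as bidirectional entailment."""
    return _normalize(a) == _normalize(b)


def semantic_clusters(
    answers: list[str], equivalent: Callable[[str, str], bool]
) -> list[list[str]]:
    """Greedy clustering: each answer joins the first cluster whose representative it matches."""
    clusters: list[list[str]] = []
    for answer in answers:
        for cluster in clusters:
            if equivalent(answer, cluster[0]):
                cluster.append(answer)
                break
        else:
            clusters.append([answer])
    return clusters


def semantic_entropy(
    answers: list[str], equivalent: Callable[[str, str], bool] = naive_equivalent
) -> float:
    """Entropy (in nats) of the empirical distribution over meaning clusters."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    n = len(answers)
    return -sum((len(c) / n) * math.log(len(c) / n) for c in semantic_clusters(answers, equivalent))
```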

3.3 Other Approaches and Techniques

Refusal-Aware Instruction Tuning (R-Tuning)

Similar to US-Tuning, R-Tuning teaches LLMs to recognize knowledge limits and abstain from answering questions outside their expertise [104].

Method: Identifies "uncertain" data by comparing the model's predictions with the ground truth, then fine-tunes on a modified dataset in which answers are paired with certainty phrases such as "I am unsure".
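The data-splitting step of R-Tuning can be sketched as follows: run the model on each training question, compare its prediction with the gold answer, and append a certainty phrase to the gold answer accordingly. The case-insensitive exact-match comparison, the phrase wording, and the `build_r_tuning_data` name are assumptions for illustration; the precise template is given in [104]. `predict` is a placeholder for whatever function queries the model.

```python
from typing import Callable


def _normalize(text: str) -> str:
    # Case- and whitespace-insensitive comparison (an assumed matching rule).
    return " ".join(text.lower().split())


def build_r_tuning_data(
    examples: list[tuple[str, str]], predict: Callable[[str], str]
) -> list[tuple[str, str]]:
    """Label each (question, gold_answer) pair as certain or uncertain from the
    model's own prediction, then append a certainty phrase to the gold answer."""
    data = []
    for question, gold in examples:
        certain = _normalize(predict(question)) == _normalize(gold)
        phrase = "I am sure." if certain else "I am unsure."
        data.append((question, f"{gold} {phrase}"))
    return data
```

Keeping the gold answer in both cases, and changing only the appended phrase, teaches the model to flag its own uncertainty rather than simply to refuse.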

[IDK] Token Introduction

Introduces a special "I don't know" token into the model's vocabulary, providing an unambiguous way to express uncertainty.

Benefits: Clear signal, reduces hallucination, consistent interpretation. Challenges: Requires careful training to prevent overuse.
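In a Hugging Face `transformers` setup, a new token would typically be registered with `tokenizer.add_special_tokens` followed by `model.resize_token_embeddings(len(tokenizer))`, and then trained. The toy sketch below shows only the decoding side: once `[IDK]` is part of the vocabulary, abstention becomes an ordinary next-token decision. The logits are made up, and the probability threshold rule is a hypothetical choice, not part of any published method.

```python
import math

IDK = "[IDK]"  # special uncertainty token, assumed to be in the vocabulary


def softmax(logits: dict[str, float]) -> dict[str, float]:
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - top) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def decode_step(logits: dict[str, float], idk_threshold: float = 0.5) -> str:
    """Emit [IDK] if its probability reaches the (hypothetical) threshold,
    otherwise the most probable ordinary token."""
    probs = softmax(logits)
    if probs.get(IDK, 0.0) >= idk_threshold:
        return IDK
    candidates = [tok for tok in probs if tok != IDK]
    if not candidates:
        return IDK
    return max(candidates, key=probs.__getitem__)
```

Lowering `idk_threshold` makes the model abstain more readily, which is one way the overuse risk noted above could be traded off against hallucination.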

| Method Category | Key Examples | How It Works | Pros | Cons |
|---|---|---|---|---|
| Training-Based | US-Tuning, R-Tuning | Modifies training process using curated datasets to teach knowledge gap recognition | Fundamentally improves self-awareness | Requires model access, computationally intensive |
| Black-Box | SelfCheckGPT, Semantic Entropy | Analyzes natural language outputs, measures consistency across responses | Works with any LLM, no model modification | Computationally expensive, indirect measure |
| White-Box | Token Probability Entropy | Uses internal states (token probabilities, embeddings) to estimate uncertainty | Direct, potentially accurate measure | Not applicable to closed-source models |
| Architectural | [IDK] Token | Introduces special token to explicitly signal uncertainty | Clear, unambiguous signal | Requires model modification and careful training |
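For the white-box row, token probability entropy can be computed from the log-probabilities of candidate tokens at each generation step. The sketch below assumes only the top-k candidates are available, which is why each step is renormalized. It reports the mean per-token entropy and the length-normalized negative log-likelihood of the chosen tokens, both in nats. The function names are hypothetical, and how these scores are thresholded is left open.

```python
import math


def token_entropy(logprobs: list[float]) -> float:
    """Entropy (nats) of one step's distribution over candidate tokens.

    Renormalizes because a top-k list usually does not sum to 1."""
    probs = [math.exp(lp) for lp in logprobs]
    total = sum(probs)
    return -sum((p / total) * math.log(p / total) for p in probs if p > 0)


def mean_token_entropy(steps: list[list[float]]) -> float:
    """Average per-step entropy over a generated sequence."""
    if not steps:
        raise ValueError("need at least one generation step")
    return sum(token_entropy(step) for step in steps) / len(steps)


def sequence_nll(chosen_logprobs: list[float]) -> float:
    """Length-normalized negative log-likelihood of the tokens actually generated."""
    if not chosen_logprobs:
        raise ValueError("need at least one token")
    return -sum(chosen_logprobs) / len(chosen_logprobs)
```

A step where the model is certain (one candidate with probability 1) contributes 0; a coin-flip between two tokens contributes ln 2.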

4. Real-World Applications and Importance of Uncertainty Awareness

The ability of LLMs to accurately express uncertainty is not merely an academic curiosity; it is a critical requirement for their safe and effective deployment in a wide range of real-world applications. In high-stakes domains such as healthcare, finance, and law, the cost of an incorrect or overconfident answer can be severe, ranging from financial loss to physical harm.

4.1 High-Stakes Domains

Healthcare

Medical diagnosis and treatment recommendations require extreme accuracy and reliable uncertainty signaling.

Finance

Investment advice and risk assessment demand reliable uncertainty quantification to prevent catastrophic losses.

Legal

Case law analysis and legal counsel require precise uncertainty awareness to avoid serious consequences.

"In high-stakes domains, an LLM that can reliably say 'I don't know' is far more valuable than one that confidently provides a wrong answer."

4.2 Enhancing Trust and Reliability

Mitigating Hallucinations

Uncertainty awareness provides a viable alternative to hallucination. Instead of fabricating information, models can state when they are unsure or lack sufficient information.

This helps prevent the spread of misinformation and yields more factually accurate outputs.

Facilitating Risk Assessment

Clear uncertainty signals help users identify potentially risky outputs and take appropriate action to mitigate potential errors.

Enables human-in-the-loop approaches for quality assurance and error prevention.
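A human-in-the-loop workflow often reduces to a triage rule over an uncertainty estimate. The sketch below routes each output to automatic delivery, human review, or abstention. The two thresholds are hypothetical, and because self-reported confidence is unreliable (Section 2.2), the input is assumed to be a calibrated score, such as a sampling-based consistency measure, rather than a raw verbalized rating.

```python
from enum import Enum


class Route(Enum):
    ANSWER = "answer"              # deliver the output directly
    HUMAN_REVIEW = "human_review"  # flag for a person to check
    ABSTAIN = "abstain"            # respond with "I don't know" or escalate


def route(confidence: float, answer_threshold: float = 0.8, review_threshold: float = 0.4) -> Route:
    """Triage an output by a calibrated confidence in [0, 1] (thresholds are assumptions)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence out of range: {confidence}")
    if review_threshold > answer_threshold:
        raise ValueError("review_threshold must not exceed answer_threshold")
    if confidence >= answer_threshold:
        return Route.ANSWER
    if confidence >= review_threshold:
        return Route.HUMAN_REVIEW
    return Route.ABSTAIN
```

In a high-stakes domain, the answer threshold would normally be set high, accepting more human reviews in exchange for fewer confidently wrong outputs.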

Improving Human-Machine Collaboration

When LLMs can admit their limitations, they create more transparent and collaborative dynamics with human partners. The LLM becomes a powerful but imperfect tool requiring human oversight, rather than an infallible oracle.

This fosters productive, trusting relationships and ensures final outputs benefit from combined human and machine strengths.

4.3 Practical Implementations

Newsrooms & Fact-Checking

LLMs that flag potentially inaccurate claims help journalists focus fact-checking efforts more efficiently.

Educational Tools

Tutoring systems that admit knowledge gaps encourage critical thinking and guide students to additional resources.

Customer Service

Chatbots that recognize query complexity can escalate appropriately, improving user experience and efficiency.